You can also specify the meta data for a stage in a plain text file known as a schema file.This is not stored in the DataStage Repository but you could,for example,keep it in a document management or source code control system, or publish it on an intranet site.

The format of schema files is described in Appendix A of this manual.

Note:If you are using a schema file on an NLS system,the schema file needs to be in UTF-8 format.It is,however,easy to convert text files between two different maps with a DataStage job.Such a job would read data from a text file using a Sequential File stage and specifying the appropriate character set on the NLS Map page. It would write the data to another file using a Sequential File stage, specifying the UTF-8 map on the NLS Map page.Some parallel job stages allow you to use a partial schema. This means that you only need define column definitions for those columns that you are actually going to operate on. Partial schemas are also described below.

Remember that you should turn runtime column propagation on if you intend to use schema files to define column meta data.

Data Types

Schemas are an alternative way for you to specify column definitions for the data used by parallel jobs. By default, most parallel job stages take their meta data from the Columns tab, which contains table definitions, supplemented,where necessary by format information from the Format tab. For some stages, you can specify a property that causes the stage to take its meta data from the specified schema file instead. Some stages also allow you to specify a partial schema. This allows you to describe only those columns that a particular stage is processing and ignore the rest. The schema file is a plain text file, this appendix describes its format.A partial schema has the same format.

Note: If you are using a schema file on an NLS system, the schema file needs to be in UTF-8 format. It is, however, easy to convert text files between two different maps with a DataStage job. Such a job would read data from a text file using a Sequential File stage and specifying the appropriate character set on the NLS Map page. It would write the data to another file using a Sequential File stage, specifying the UTF-8 map on the NLS Map page. Schema Format A schema contains a record (or row) definition. This describes each column (or field) that will be encountered within the record, giving column name and data type. The following is an example record schema:

record (

name:string[255];

address:nullable string[255];

value1:int32;

A-2 DataStage Enterprise Edition Parallel Job Developer’s Guide

value2:int32

date:date)

(The line breaks are there for ease of reading, you would omit these if you

were defining a partial schema, for example

record(name:string[255];value1:int32;date:date)is a valid

schema.)

The format of each line describing a column is:

column_name:[nullability]datatype;

• column_name. This is the name that identifies the column. Names

must start with a letter or an underscore (_), and can contain only

alphanumeric or underscore characters. The name is not case sensitive.

The name can be of any length.

• nullability. You can optionally specify whether a column is allowed

to contain a null value, or whether this would be viewed as invalid.

If the column can be null, insert the word ’nullable’. By default

columns are not nullable.

You can also include ’nullable’ at record level to specify that all

columns are nullable, then override the setting for individual

columns by specifying ‘not nullable’. For example:

record nullable (

name:not nullable string[255];

value1:int32;

date:date)

• datatype. This is the data type of the column. This uses the internal

data types as described on page 2-32, not SQL data types as used

on Columns tabs in stage editors.

You can include comments in schema definition files. A comment is

started by a double slash //, and ended by a newline.

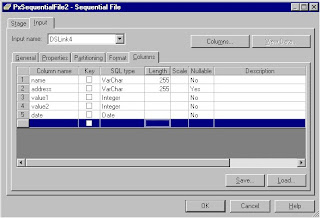

The example schema corresponds to the following table definition as specified

on a Columns tab of a stage editor:

The following sections give special consideration for representing various

data types in a schema file.

Date Columns

The following examples show various different data definitions:

record (dateField1:date; ) // single date

record (dateField2[10]:date; ) // 10-element date vector

record (dateField3[]:date; ) // variable-length date vector

record (dateField4:nullable date;) // nullable date

(See “Complex Data Types” on page 2-36 for information about vectors.)

Decimal Columns

To define a record field with data type decimal, you must specify the

column’s precision, and you may optionally specify its scale, as follows:

column_name:decimal[ precision, scale];

where precision is greater than or equal 1 and scale is greater than or equal to 0 and less than precision. If the scale is not specified, it defaults to zero, indicating an integer value.

The following examples show different decimal column definitions:

record (dField1:decimal[12]; ) // 12-digit integer

record (dField2[10]:decimal[15,3]; )// 10-element

//decimal vector

record (dField3:nullable decimal[15,3];) // nullable decimal

Floating-Point Columns To define floating-point fields, you use the sfloat (single-precision) or

dfloat (double-precision) data type, as in the following examples:

record (aSingle:sfloat; aDouble:dfloat; ) // float definitions

record (aSingle: nullable sfloat;) // nullable sfloat

record (doubles[5]:dfloat;) // fixed-length vector of dfloats

record (singles[]:sfloat;) // variable-length vector of sfloats

Integer Columns

To define integer fields, you use an 8-, 16-, 32-, or 64-bit integer data type

(signed or unsigned), as shown in the following examples:

record (n:int32;) // 32-bit signed integer

record (n:nullable int64;) // nullable, 64-bit signed integer

record (n[10]:int16;) // fixed-length vector of 16-bit

//signed integer

record (n[]:uint8;) // variable-length vector of 8-bit unsigned

//int

Raw Columns

You can define a record field that is a collection of untyped bytes, of fixed or Variable length. You give the field data type raw. The definition for a raw field is similar to that of a string field, as shown in the following examples:

record (var1:raw[];) // variable-length raw field

record (var2:raw;) // variable-length raw field; same as raw[]

record (var3:raw[40];) // fixed-length raw field

record (var4[5]:raw[40];)// fixed-length vector of raw fields

You can specify the maximum number of bytes allowed in the raw field with the optional property max, as shown in the example below:

Schemas A-5

record (var7:raw[max=80];)

The length of a fixed-length raw field must be at least 1.

String Columns

You can define string fields of fixed or variable length. For variable-length strings, the string length is stored as part of the string as a hidden integer.The storage used to hold the string length is not included in the length of

the string.

The following examples show string field definitions:

record (var1:string[];) // variable-length string

record (var2:string;) // variable-length string; same as string[]

record (var3:string[80];) // fixed-length string of 80 bytes

record (var4:nullable string[80];) // nullable string

record (var5[10]:string;) // fixed-length vector of strings

record (var6[]:string[80];) // variable-length vector of strings

You can specify the maximum length of a string with the optional property max, as shown in the example below:

record (var7:string[max=80];)

The length of a fixed-length string must be at least 1.

Time Columns

By default, the smallest unit of measure for a time value is seconds, but you can instead use microseconds with the [microseconds] option. The following are examples of time field definitions:

record (tField1:time; ) // single time field in seconds

record (tField2:time[microseconds];)// time field in

//microseconds

record (tField3[]:time; ) // variable-length time vector

record (tField4:nullable time;) // nullable time

Timestamp Columns

Timestamp fields contain both time and date information. In the time portion, you can use seconds (the default) or microseconds for the smallest unit of measure. For example:

record (tsField1:timestamp;)// single timestamp field in //seconds

record (tsField2:timestamp[microseconds];)// timestamp in //microseconds

record (tsField3[15]:timestamp; )// fixed-length timestamp //vector

record (tsField4:nullable timestamp;)// nullable timestamp Vectors

Many of the previous examples show how to define a vector of a particular data type. You define a vector field by following the column name with brackets []. For a variable-length vector, you leave the brackets empty, and for a fixed-length vector you put the number of vector elements in the brackets. For example, to define a variable-length vector of int32, you would use a field definition such as the following one:

intVec[]:int32;

To define a fixed-length vector of 10 elements of type sfloat, you would use a definition such as:

sfloatVec[10]:sfloat;

You can define a vector of any data type, including string and raw. You cannot define a vector of a vector or tagged type. You can, however, define a vector of type subrecord, and you can define that subrecord includes a tagged column or a vector. You can make vector elements nullable, as shown in the following record definition:

record (vInt[]:nullable int32;

vDate[6]:nullable date; )

In the example above, every element of the variable-length vector vInt will be nullable, as will every element of fixed-length vector vDate. To test whether a vector of nullable elements contains no data, you must check each element for null.

Subrecords

Record schemas let you define nested field definitions, or subrecords, by

specifying the type subrec. A subrecord itself does not define any storage;

instead, the fields of the subrecord define storage. The fields in a subrecord

can be of any data type, including tagged.

The following example defines a record that contains a subrecord:

record ( intField:int16;

aSubrec:subrec (

aField:int16;

Schemas A-7

bField:sfloat; );

)

In this example, the record contains a 16-bit integer field, intField, and a

subrecord field, aSubrec. The subrecord includes two fields: a 16-bit

integer and a single-precision float.

Subrecord columns of value data types (including string and raw) can be

nullable, and subrecord columns of subrec or vector types can have

nullable elements. A subrecord itself cannot be nullable.

You can define vectors (fixed-length or variable-length) of subrecords. The

following example shows a definition of a fixed-length vector of

subrecords:

record (aSubrec[10]:subrec (

aField:int16;

bField:sfloat; );

)

You can also nest subrecords and vectors of subrecords, to any depth of

nesting. The following example defines a fixed-length vector of

subrecords, aSubrec, that contains a nested variable-length vector of

subrecords, cSubrec:

record (aSubrec[10]:subrec (

aField:int16;

bField:sfloat;

cSubrec[]:subrec (

cAField:uint8;

cBField:dfloat; );

);

)

Subrecords can include tagged aggregate fields, as shown in the following

sample definition:

record (aSubrec:subrec (

aField:string;

bField:int32;

cField:tagged (

dField:int16;

eField:sfloat;

);

);

)

In this example, aSubrec has a string field, an int32 field, and a tagged

aggregate field. The tagged aggregate field cField can have either of two

data types, int16 or sfloat.

A-8 DataStage Enterprise Edition Parallel Job Developer’s Guide

Tagged Columns

You can use schemas to define tagged columns (similar to C unions), with

the data type tagged. Defining a record with a tagged type allows each

record of a data set to have a different data type for the tagged column.

When your application writes to a field in a tagged column, DataStage

updates the tag, which identifies it as having the type of the column that

is referenced.

The data type of a tagged columns can be of any data type except tagged

or subrec. For example, the following record defines a tagged subrecord

field:

record ( tagField:tagged (

aField:string;

bField:int32;

cField:sfloat;

) ;

)

In the example above, the data type of tagField can be one of following:

a variable-length string, an int32, or an sfloat.

Partial Schemas

Some parallel job stages allow you to use a partial schema. This means that

you only need define column definitions for those columns that you are

actually going to operate on. The stages that allow you to do this are file

stages that have a Format tab. These are:

• Sequential File stage

• File Set stage

• External Source stage

• External Target stage

• Column Import stage

You specify a partial schema using the Intact property on the Format tab

of the stage together with the Schema File property on the corresponding

Properties tab. To use this facility, you need to turn Runtime Column

Propagation on, and provide enough information about the columns

being passed through to enable DataStage to skip over them as necessary.

In the file defining the partial schema, you need to describe the record and

the individual columns. Describe the record as follows:

• intact. This property specifies that the schema being defined is a

partial one. You can optionally specify a name for the intact schema

here as well, which you can then reference from the Intact property

of the Format tab.

• record_length. The length of the record, including record delimiter

characters.

• record_delim_string. String giving the record delimiter as an

ASCII string in single quotes. (For a single character delimiter, use

record_delim and supply a single ASCII character in single

quotes).

Describe the columns as follows:

• position. The position of the starting character within the record.

• delim. The column trailing delimiter, can be any of the following:

– ws to skip all standard whitespace characters (space, tab, and

newline) trailing after a field.

– end to specify that the last field in the record is composed of all

remaining bytes until the end of the record.

– none to specify that fields have no delimiter.

A-10 DataStage Enterprise Edition Parallel Job Developer’s Guide

– null to specify that the delimiter is the ASCII null character.

– ASCII_char specifies a single ASCII delimiter. Enclose ASCII_char

in single quotation marks. (To specify multiple ASCII characters,

use delim_string followed by the string in single quotes.)

• text specifies the data representation type of a field as being text

rather than binary. Data is formatted as text by default. (Specify

binary if data is binary.)

Columns that are being passed through intact only need to be described in

enough detail to allow DataStage to skip them and locate the columns that

are to be operated on.

For example, say you have a sequential file defining rows comprising six

fixed width columns, and you are in interested in the last two. You know

that the first four columns together contain 80 characters. Your partial

schema definition might appear as follows:

record { intact=details, record_delim_string = '\r\n' }

( colstoignore: string [80]

name: string [20] { delim=none };

income: uint32 {delim = “,”, text };

Your stage would not be able to alter anything in a row other than the

name and income columns (it could also add a new column to either the

beginning or the end of a row).

-----------------------------------------------------------------------

Complex Data Types

Parallel jobs support three complex data types:

• Subrecords

• Tagged subrecords

• Vectors

When referring to complex data in DataStage column definitions, you can

specify fully qualified column names, for example:

Parent.Child5.Grandchild2

Subrecords

A subrecord is a nested data structure. The column with type subrecord

does not itself define any storage, but the columns it contains do. These

columns can have any data type, and you can nest subrecords one within

another. The LEVEL property is used to specify the structure of

No comments:

Post a Comment