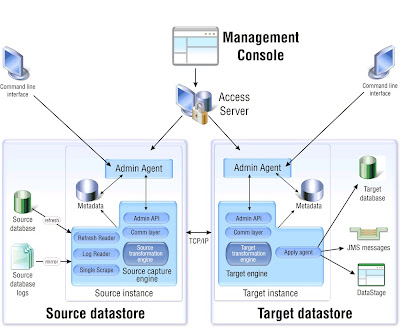

The key components of the InfoSphere CDC architecture are described below:

Access Server—Controls all of the non-command line access to the replication environment. When you log in to Management Console, you are connecting to Access Server. Access Server can be closed on the client workstation without affecting active data replication activities between source and target servers.

Admin API—Operates as an optional Java-based programming interface that you can use to script operational configurations or interactions.

Apply agent—Acts as the agent on the target that processes changes as sent by the source.

Command line interface—Allows you to administer datastores and user accounts, as well as to perform administration scripting, independent of Management Console.

Communication Layer (TCP/IP)—Acts as the dedicated network connection between the Source and the Target.

Source and Target Datastore—Represents the data files and InfoSphere CDC instances required for data replication. Each datastore represents a database to which you want to connect and acts as a container for your tables. Tables made available for replication are contained in a datastore.

Management Console—Allows you to configure, monitor and manage replication on various servers, specify replication parameters, and initiate refresh and mirroring operations from a client workstation. Management Console also allows you to monitor replication operations, latency, event messages, and other statistics supported by the source or target datastore. The monitor in Management Console is intended for time-critical working environments that require continuous analysis of data movement. After you have set up replication, Management Console can be closed on the client workstation without affecting active data replication activities between source and target servers.

Metadata—Represents the information about the relevant tables, mappings, subscriptions, notifications, events, and other particulars of a data replication instance that you set up.

Mirror—Performs the replication of changes to the target table or accumulation of source table changes used to replicate changes to the target table at a later time. If you have implemented bidirectional replication in your environment, mirroring can occur to and from both the source and target tables.

Refresh—Performs the initial synchronization of the tables from the source database to the target. This is read by the Refresh reader.

Replication Engine—Serves to send and receive data. The process that sends replicated data is the Source Capture Engine and the process that receives replicated data is the Target Engine. An InfoSphere CDC instance can operate as a source capture engine and a target engine simultaneously.

Single Scrape—Acts as a source-only log reader and a log parser component. It checks and analyzes the source database logs for all of the subscriptions on the selected datastore.

Source transformation engine—Processes row filtering, critical columns, column filtering, encoding conversions, and other data to propagate to the target datastore engine.

Source database logs—Maintained by the source database for its own recovery purposes. The InfoSphere CDC log reader inspects these in the mirroring process, but filters out the tables that are not in scope for replication.

Target transformation engine—Processes data and value translations, encoding conversions, user exits, conflict detections, and other data on the target datastore engine.

There are two types of target-only destinations for replication that are not databases:JMS Messages—Acts as a JMS message destination (queue or topic) for row-level operations that are created as XML documents.

InfoSphere DataStage—Processes changes delivered from InfoSphere CDC that can be used by InfoSphere DataStage jobs.

Applying change data by using a CDC Transaction stage